Databricks migration steps

When you migrate from an old Databricks workspace to a new one, all of the files, jobs, clusters, configurations, and dependencies need to move. This is time-consuming, and it is easy to miss something. I have documented the detailed migration steps and written several scripts that automatically migrate folders, clusters, and jobs.

In this chapter, I will show you how to migrate Databricks.

0. Prepare all scripts

Navigate to https://github.com/xinyeah/Azure-Databricks-migration-tutorial, fork this repository, and download all needed scripts.

1. Install databricks-cli

```bash
pip3 install databricks-cli
```

2. Set up authentication for two profiles

Set up authentication for two profiles, one for the old Databricks and one for the new Databricks. This CLI authentication must be done with a personal access token.

2.1 Generate a personal access token.

Here is a step-by-step guide to generating it.

2.2 Copy the generated token and store it as a secret in Azure Key Vault.

On the Key Vault properties page, select Secrets.

Click Generate/Import.

On the Create a secret screen choose the following values:

- Upload options: Manual.

- Name:

- Value: paste the generated token here

- Leave the other values at their defaults and click Create.

2.3 Set up profiles

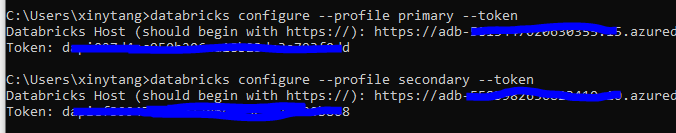

In this case, the profile primary is for the old Databricks, and the profile secondary is for the new one.

```bash
databricks configure --profile primary --token
databricks configure --profile secondary --token
```

Each time you set up a profile, you need to provide the Databricks host URL and the personal access token generated previously.

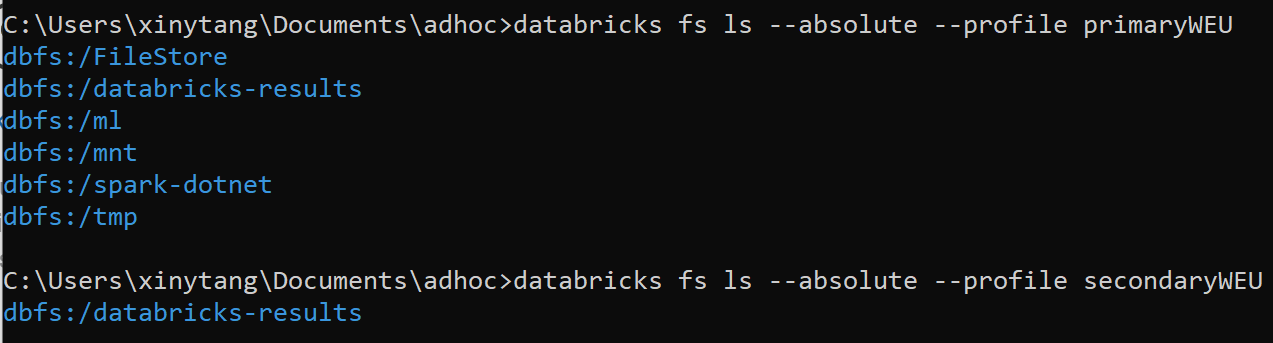
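The CLI stores each profile as a section of the INI file `~/.databrickscfg`, with `host` and `token` keys. If you prefer not to answer the prompts, a minimal sketch like the one below can render and check that file using Python's `configparser`. It assumes that file layout. The helper names are hypothetical, and the hosts and tokens are placeholders you supply.

```python
import configparser
import io
import os


def render_databrickscfg(profiles):
    """Render profiles as the text of a .databrickscfg-style INI file.

    Assumption: each profile is a section with 'host' and 'token' keys.
    `profiles` maps a profile name (e.g. "primary") to such a dict.
    """
    # interpolation=None so a '%' in a value is written literally.
    config = configparser.ConfigParser(interpolation=None)
    for name, settings in profiles.items():
        config[name] = {"host": settings["host"], "token": settings["token"]}
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


def parse_databrickscfg(text):
    """Parse .databrickscfg text into {profile: {"host": ..., "token": ...}}."""
    config = configparser.ConfigParser(interpolation=None)
    config.read_string(text)
    return {name: dict(config[name]) for name in config.sections()}


def save_databrickscfg(text, path=None):
    """Write the rendered text to ~/.databrickscfg, replacing the whole file."""
    path = path or os.path.expanduser("~/.databrickscfg")
    with open(path, "w") as f:
        f.write(text)
    os.chmod(path, 0o600)  # tokens are secrets: owner read/write only (POSIX)
```

For example, `save_databrickscfg(render_databrickscfg({"primary": {...}, "secondary": {...}}))` writes both profiles in one step. Because the save overwrites the file, any profiles you still need must be included in the dict.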

2.4 Validate the profile

```bash
databricks fs ls --absolute --profile primary
```

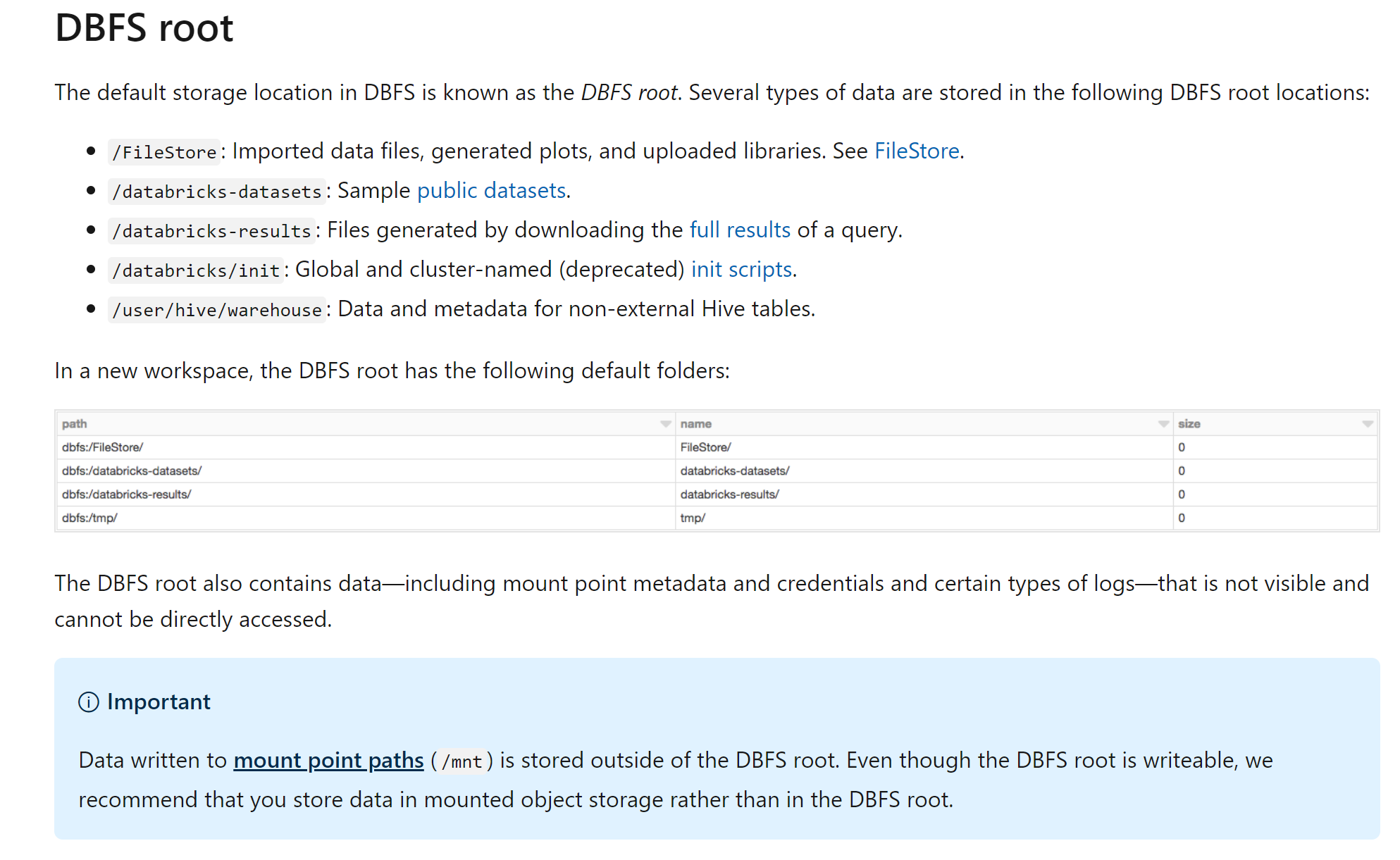

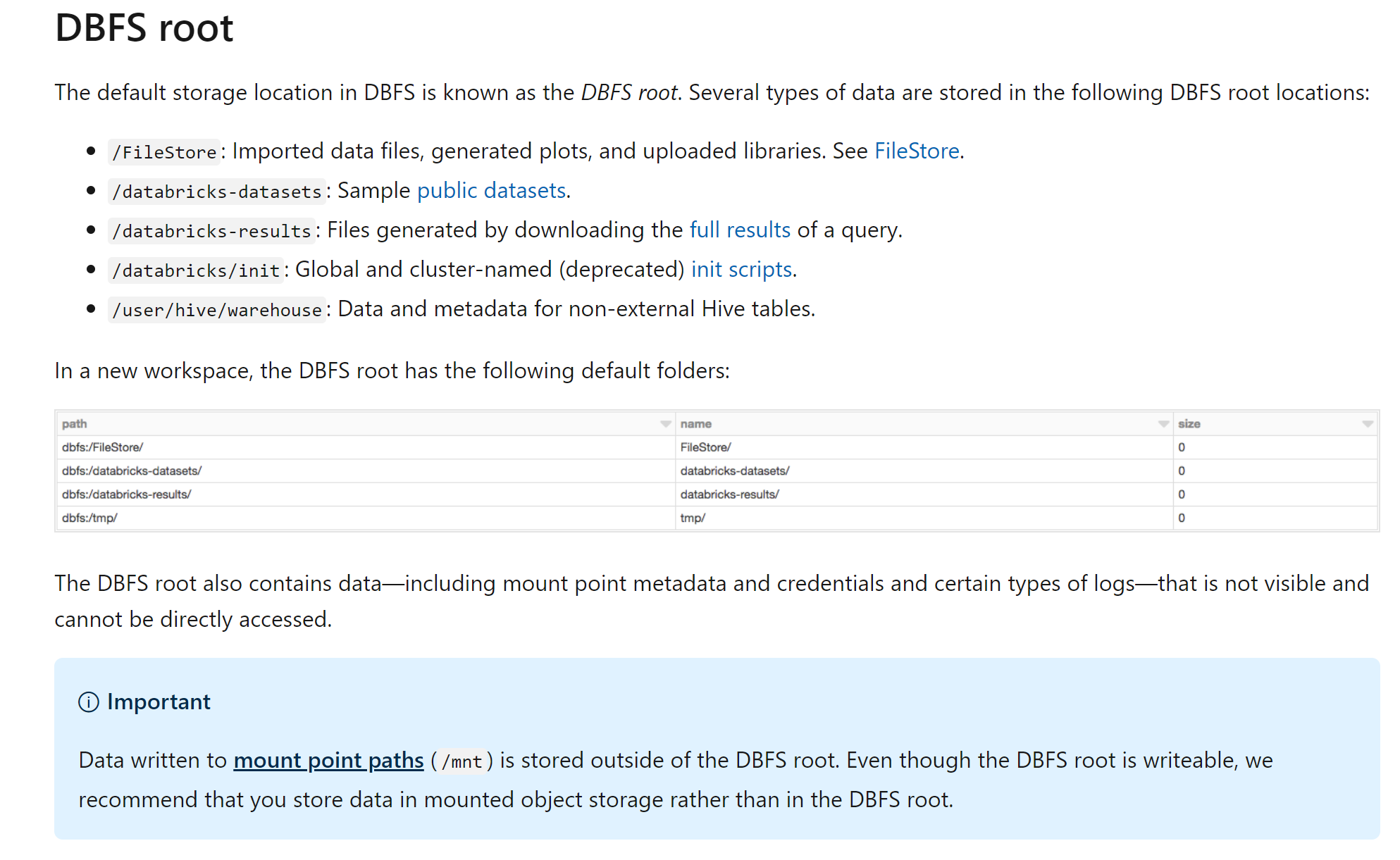

Here are the DBFS root locations, according to the [docs](https://docs.microsoft.com/en-us/azure/databricks/data/databricks-file-system).


3. Migrate Azure Active Directory users

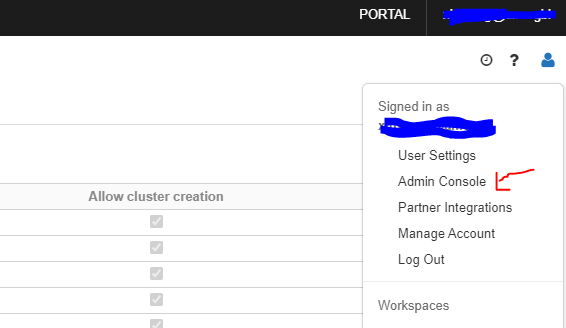

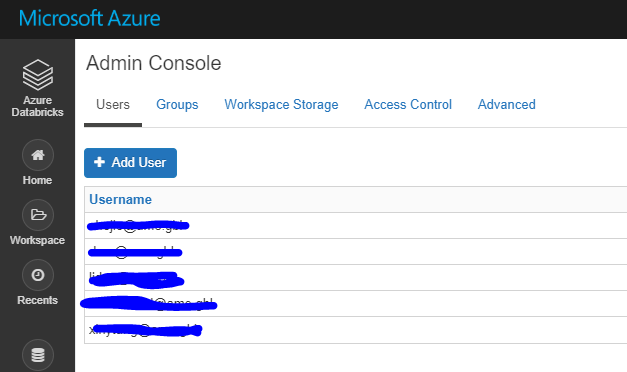

3.1 Navigate to the old Databricks UI, expand Account in the upper-right corner, and click Admin Console. As an admin, you can get the list of users in this Databricks workspace there.

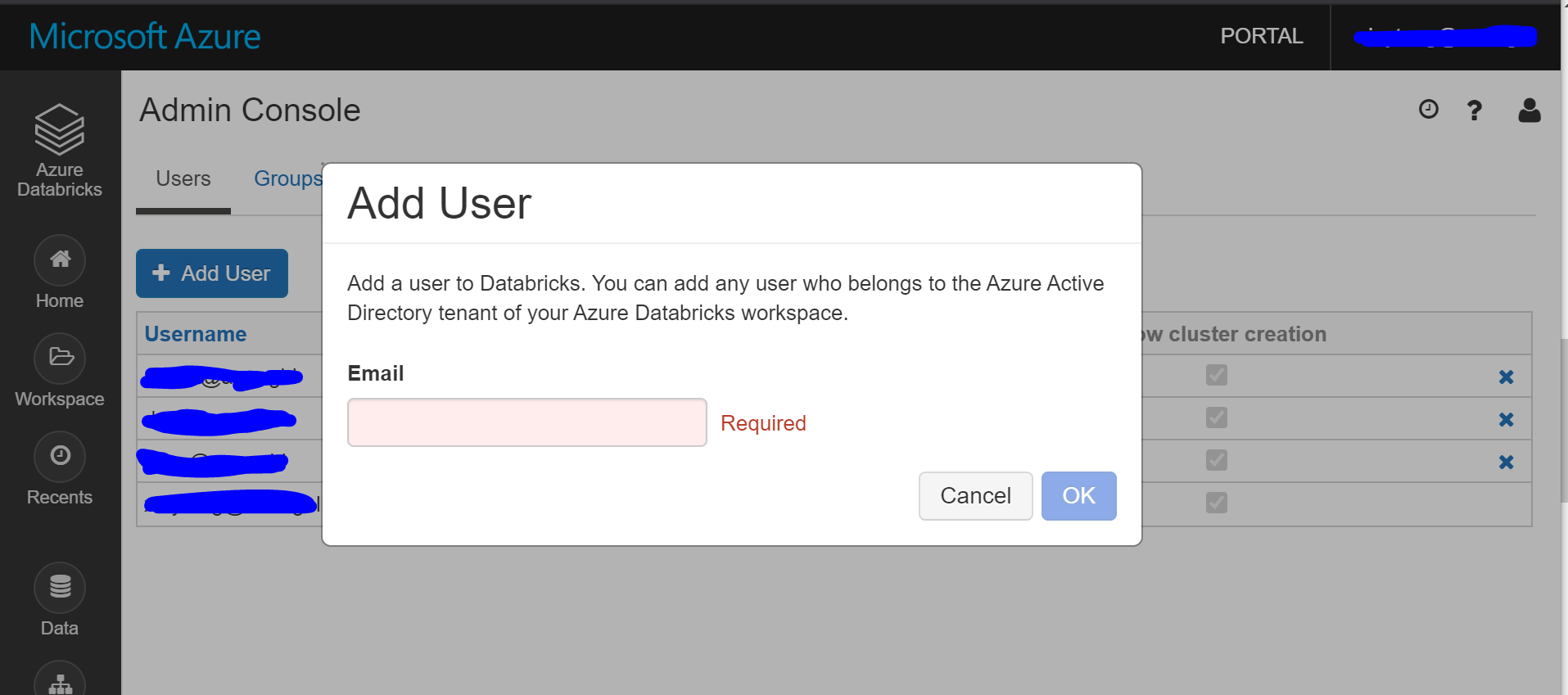

3.2 Navigate to the new Databricks portal, open the Users tab of the Admin Console, and click Add User to add the users and admins.

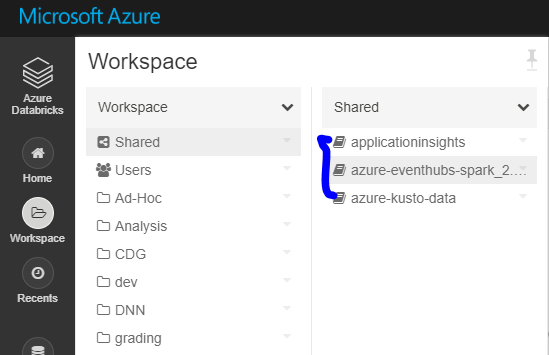
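With many users, comparing the two lists by hand is error-prone. This is a sketch, assuming you have saved the JSON returned by the SCIM endpoint `GET /api/2.0/preview/scim/v2/Users` from each workspace. It reports which users still need to be added to the new workspace and whether each one belongs to the `admins` group. It also assumes each saved response already contains every user, so any pagination must have been merged beforehand. The function names are hypothetical.

```python
def users_by_name(scim_response):
    """Map lower-cased userName -> True if the user is in the 'admins' group.

    Assumption: `scim_response` is a parsed SCIM ListResponse whose
    'Resources' entries have 'userName' and an optional 'groups' list of
    {"display": <group name>} objects.
    """
    users = {}
    for user in scim_response.get("Resources", []):
        groups = {g.get("display") for g in user.get("groups", [])}
        # AAD user names are e-mail addresses, so compare case-insensitively.
        users[user["userName"].lower()] = "admins" in groups
    return users


def missing_users(old_response, new_response):
    """Return sorted [(userName, is_admin)] found only in the old workspace."""
    old = users_by_name(old_response)
    new = users_by_name(new_response)
    return sorted((name, admin) for name, admin in old.items() if name not in new)
```

Each `(name, True)` entry is a user to add in step 3.2 and then grant admin rights.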

4. Migrate the workspace folders and notebooks

Solution 1

Put migrate-folders.py in a separate folder (the script exports files into this folder), then run it to migrate folders and notebooks. Libraries are not migrated by this script; Step 5 shows how to migrate them.

Remember to replace the profile variables in this script with your own profile names:

```python
EXPORT_PROFILE = "primary"
```
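A folder migration starts by walking the workspace tree. The sketch below shows that walk. It is not the code of migrate-folders.py. It assumes a hypothetical `list_objects(path)` callable that returns the `objects` array of the Workspace API `GET /api/2.0/workspace/list` response, where each entry has `path` and `object_type`. It skips `LIBRARY` objects because libraries are handled in Step 5.

```python
def walk_workspace(list_objects, root="/"):
    """Collect (directories, notebooks) under `root`, iteratively.

    Assumption: `list_objects(path)` returns a list of dicts with at least
    'path' and 'object_type' keys, like the Workspace API list response.
    """
    directories, notebooks = [], []
    stack = [root]
    while stack:
        path = stack.pop()
        for obj in list_objects(path):
            kind = obj.get("object_type")
            if kind == "DIRECTORY":
                directories.append(obj["path"])
                stack.append(obj["path"])  # descend into it later
            elif kind == "NOTEBOOK":
                notebooks.append(obj["path"])
            # LIBRARY and any other object types are deliberately ignored.
    return sorted(directories), sorted(notebooks)
```

A real `list_objects` should return `response.get("objects", [])`, because an empty directory may come back without an `objects` field. Each notebook can then be exported with `GET /api/2.0/workspace/export` and imported into the new workspace with `POST /api/2.0/workspace/import`, after creating its directories with `POST /api/2.0/workspace/mkdirs`.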

Solution 2

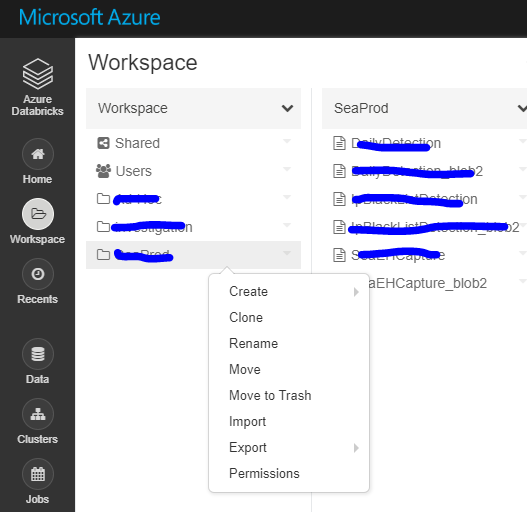

Alternatively, you can do it manually: export the folders as a DBC archive from the old workspace and import the archive into the new one.

5. Migrate libraries

There is no direct way to migrate libraries, so you need to reinstall all of them into the new Databricks manually.

5.1 List all libraries in the old Databricks.
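Step 5.1 can be scripted even though the reinstall is manual. This is a sketch, assuming you have saved the JSON returned by the Libraries API endpoint `GET /api/2.0/libraries/all-cluster-statuses` from the old workspace. It groups each cluster's libraries by type so you know what to reinstall and where. The function names are hypothetical.

```python
from collections import defaultdict


def describe_library(library):
    """Return (type, spec) for one library dict such as {"pypi": {...}}."""
    # Assumption: library specs use the keys pypi, maven, cran, jar, whl, egg.
    if "pypi" in library:
        return "pypi", library["pypi"]["package"]
    if "maven" in library:
        return "maven", library["maven"]["coordinates"]
    if "cran" in library:
        return "cran", library["cran"]["package"]
    for kind in ("jar", "whl", "egg"):
        if kind in library:
            return kind, library[kind]
    return "unknown", str(library)


def libraries_by_cluster(statuses_response):
    """Map cluster_id -> {library type -> sorted list of specs}."""
    result = {}
    for cluster in statuses_response.get("statuses", []):
        grouped = defaultdict(list)
        for entry in cluster.get("library_statuses", []):
            kind, spec = describe_library(entry["library"])
            grouped[kind].append(spec)
        result[cluster["cluster_id"]] = {k: sorted(v) for k, v in grouped.items()}
    return result
```

Libraries referenced by a `dbfs:/` path (jar, whl, egg) also need their files copied to the new workspace's DBFS before you reinstall them.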

5.2 Install all libraries.

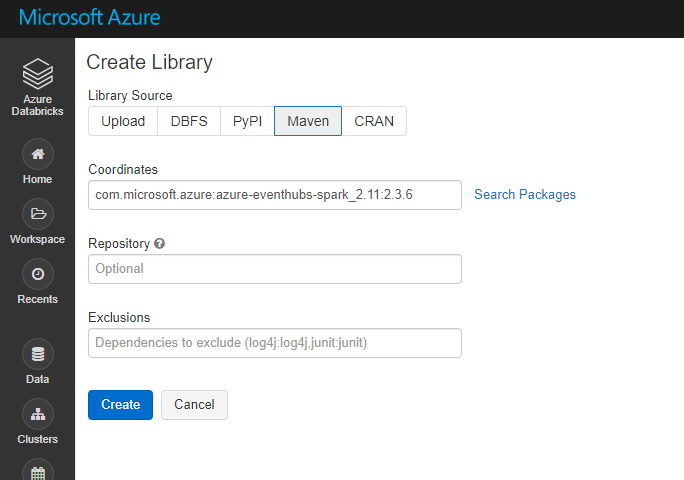

Maven libraries:

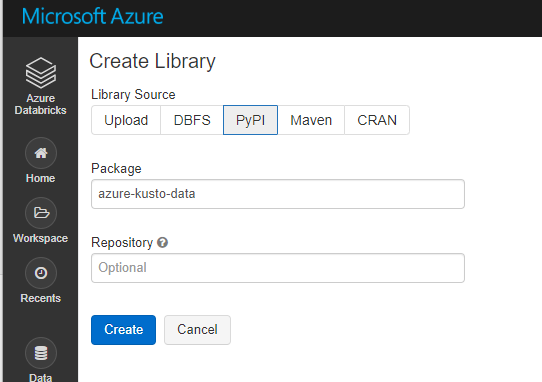

PyPI libraries:

6. Migrate the cluster configuration

Run migrate-cluster.py to migrate all interactive clusters. The script skips all clusters whose source is a job (job clusters).

Remember to replace the profile variables in this script with your own profile names:

```python
EXPORT_PROFILE = "primary"
```
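Migrating interactive clusters comes down to filtering and cleaning the output of the Clusters API. The sketch below shows that logic. It is not the actual migrate-cluster.py. It assumes the `clusters` array from `GET /api/2.0/clusters/list` and drops entries whose `cluster_source` is `JOB`. It then keeps only a subset of fields for `POST /api/2.0/clusters/create`. That field list, `CREATE_FIELDS`, is an assumption to adjust for your clusters.

```python
# Assumption: fields accepted by the Clusters API create call. Runtime-only
# fields such as cluster_id, state and driver are deliberately left out.
CREATE_FIELDS = {
    "cluster_name", "spark_version", "node_type_id", "driver_node_type_id",
    "num_workers", "autoscale", "autotermination_minutes", "spark_conf",
    "spark_env_vars", "custom_tags", "init_scripts", "cluster_log_conf",
    "enable_elastic_disk", "ssh_public_keys", "azure_attributes",
}


def interactive_cluster_specs(clusters):
    """Turn a clusters/list result into create payloads for non-job clusters."""
    specs = []
    for cluster in clusters:
        if cluster.get("cluster_source") == "JOB":
            continue  # job clusters are recreated by their jobs
        spec = {k: v for k, v in cluster.items() if k in CREATE_FIELDS}
        if "autoscale" in spec:
            # Precaution: autoscaling clusters are sized by min/max workers.
            spec.pop("num_workers", None)
        specs.append(spec)
    return specs
```

A whitelist is used instead of a blacklist so that new read-only fields in the list response do not break the create call. References to objects in the old workspace, such as instance pools, still have to be recreated or removed.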

7. Migrate the jobs configuration

Run migrate-job.py to migrate all jobs. Schedule information is removed so that jobs do not start before the proper cutover.

Remember to replace the profile variables in this script with your own profile names:

```python
EXPORT_PROFILE = "primary"
```

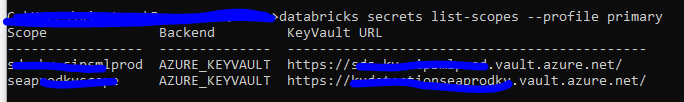

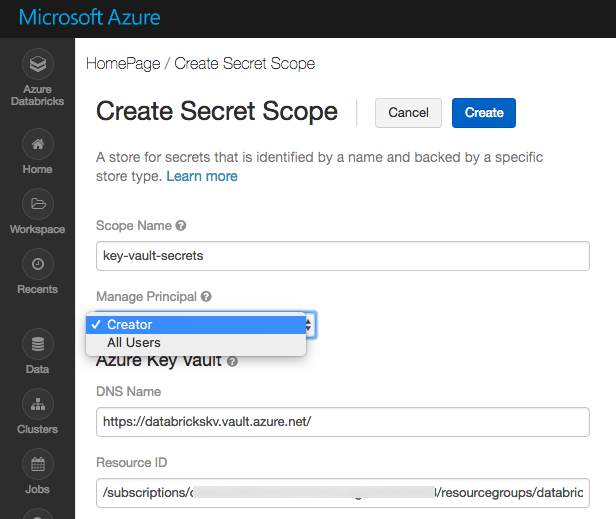
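Removing the schedule is the step that keeps migrated jobs from running in both workspaces. The sketch below shows that transformation. It assumes the `jobs` array from the Jobs API 2.0 `GET /api/2.0/jobs/list`, where each job's definition sits under `settings`. The returned dicts are candidate bodies for `POST /api/2.0/jobs/create`. The optional `cluster_id_map` (old to new interactive cluster IDs from step 6) is a hypothetical parameter for jobs that use `existing_cluster_id`.

```python
import copy


def job_create_payloads(jobs, cluster_id_map=None):
    """Return jobs/create bodies with schedules removed.

    Assumption: each job looks like {"job_id": ..., "settings": {...}}.
    Jobs pointing at an existing cluster are remapped when the old ID
    appears in `cluster_id_map`; the input list is not modified.
    """
    cluster_id_map = cluster_id_map or {}
    payloads = []
    for job in jobs:
        settings = copy.deepcopy(job["settings"])
        # Without a schedule the job only runs when triggered (manually or
        # through the API), so it cannot start before the cutover.
        settings.pop("schedule", None)
        old_id = settings.get("existing_cluster_id")
        if old_id in cluster_id_map:
            settings["existing_cluster_id"] = cluster_id_map[old_id]
        payloads.append(settings)
    return payloads
```

Keep the removed schedules from the old workspace. At cutover, you can add them back with `POST /api/2.0/jobs/reset` or in the UI.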

8. Migrate Azure Key Vault-backed secret scopes

There are two types of secret scope: Azure Key Vault-backed and Databricks-backed.

Creating an Azure Key Vault-backed secret scope is supported only in the Azure Databricks UI. You cannot create a scope using the Secrets CLI or API.

List all secret scopes:

```bash
databricks secrets list-scopes --profile primary
```
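Key Vault-backed scopes must be recreated in the UI, while Databricks-backed scopes can be recreated with the CLI, so it helps to split the scope list first. This is a sketch, assuming the JSON shape of the Secrets API `GET /api/2.0/secrets/scopes/list` response. In that shape, each scope has a `name` and a `backend_type` of `AZURE_KEYVAULT` or `DATABRICKS`. Key Vault scopes also carry `keyvault_metadata` with `dns_name` and `resource_id`. The function name is hypothetical. The Secrets API lists secret keys but does not return their values, so secrets in Databricks-backed scopes must be re-entered from their original source.

```python
def split_scopes(scopes_response):
    """Split scopes into (keyvault_scopes, databricks_scopes).

    Key Vault entries keep the DNS name and resource ID that the
    createScope page asks for; Databricks-backed entries are just names.
    """
    keyvault, databricks = [], []
    for scope in scopes_response.get("scopes", []):
        if scope.get("backend_type") == "AZURE_KEYVAULT":
            meta = scope.get("keyvault_metadata", {})
            keyvault.append({
                "name": scope["name"],
                "dns_name": meta.get("dns_name"),
                "resource_id": meta.get("resource_id"),
            })
        else:
            databricks.append(scope["name"])
    return keyvault, databricks
```

Each Databricks-backed name can then be recreated with `databricks secrets create-scope --scope <name> --profile secondary`. Each Key Vault entry gives you the values to enter on the createScope page.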

Create an Azure Key Vault-backed secret scope:

- Go to https://<databricks-instance>#secrets/createScope. This URL is case sensitive: the "S" of "Scope" in createScope must be uppercase.

- Enter the name of the secret scope. Secret scope names are case insensitive.

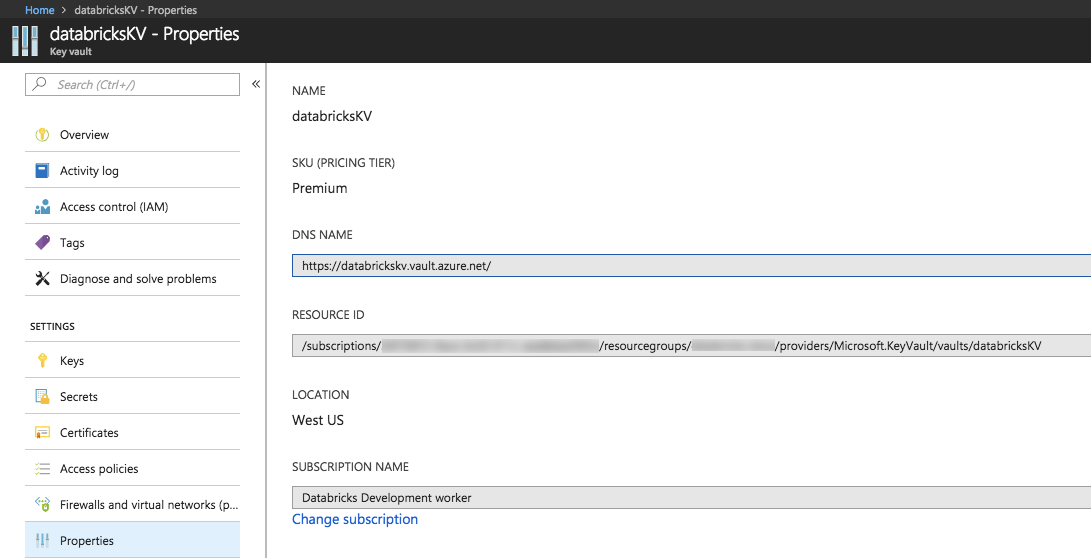

- Enter the DNS Name and Resource ID of the Azure Key Vault. These properties are available from the Properties tab of the Key Vault in your Azure portal.

- Click the Create button.

9. Migrate Azure blob storage and Azure Data Lake Storage mounts

There is no API for migrating mounts, so you have to remount all storage manually.

9.1 List all mount points in the old Databricks from a notebook.

```python
dbutils.fs.mounts()
```

9.2 From a notebook in the new Databricks, remount all Blob storage following the official docs.

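```python
dbutils.fs.mount(
  source = "wasbs://<container-name>@<storage-account-name>.blob.core.windows.net",
  mount_point = "/mnt/<mount-name>",
  extra_configs = {"<conf-key>": dbutils.secrets.get(scope = "<scope-name>", key = "<key-name>")})
```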

where

- `<mount-name>` is a DBFS path representing where the Blob storage container or a folder inside the container (specified in `source`) will be mounted in DBFS.
- `<conf-key>` can be either `fs.azure.account.key.<storage-account-name>.blob.core.windows.net` or `fs.azure.sas.<container-name>.<storage-account-name>.blob.core.windows.net`.
- `dbutils.secrets.get(scope = "<scope-name>", key = "<key-name>")` gets the key that has been stored as a secret in a secret scope.
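Before remounting, it helps to turn the output of `dbutils.fs.mounts()` into a checklist. The sketch below works on plain `(mount_point, source)` pairs so it can run outside a notebook. In a notebook, you could build the pairs with `[(m.mountPoint, m.source) for m in dbutils.fs.mounts()]`. It skips built-in mounts using a heuristic, `is_user_mount`: everything at `/` or under `/databricks` is treated as built-in. That heuristic is an assumption, so check it against your own output. For `wasbs://` sources, it fills in the account-key form of `<conf-key>` described above. The function names are hypothetical.

```python
from urllib.parse import urlparse


def is_user_mount(mount_point):
    """Heuristic (assumption): '/' and paths under '/databricks' are built-in."""
    return mount_point != "/" and not mount_point.startswith("/databricks")


def remount_plan(mounts):
    """Return one dict per user mount to recreate in the new workspace.

    `mounts` is an iterable of (mount_point, source) pairs. For wasbs://
    sources the account-key config name is filled in; other schemes get
    conf_key None and need the auth settings from the official docs.
    """
    plan = []
    for mount_point, source in mounts:
        if not is_user_mount(mount_point):
            continue
        parsed = urlparse(source)
        conf_key = None
        if parsed.scheme == "wasbs" and parsed.hostname:
            # hostname is "<storage-account-name>.blob.core.windows.net"
            conf_key = "fs.azure.account.key." + parsed.hostname
        plan.append({"mount_point": mount_point, "source": source, "conf_key": conf_key})
    return plan
```

Each plan entry maps onto one `dbutils.fs.mount` call: `source`, `mount_point`, and `extra_configs={conf_key: dbutils.secrets.get(...)}`.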

10. Migrate cluster init scripts

Copy all cluster initialization scripts to the new Databricks using the DBFS CLI.

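```bash
# Primary to local
dbfs cp -r dbfs:/databricks/init ./old-ws-init-scripts --profile primary
# Local to secondary
dbfs cp -r ./old-ws-init-scripts dbfs:/databricks/init --profile secondary
```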

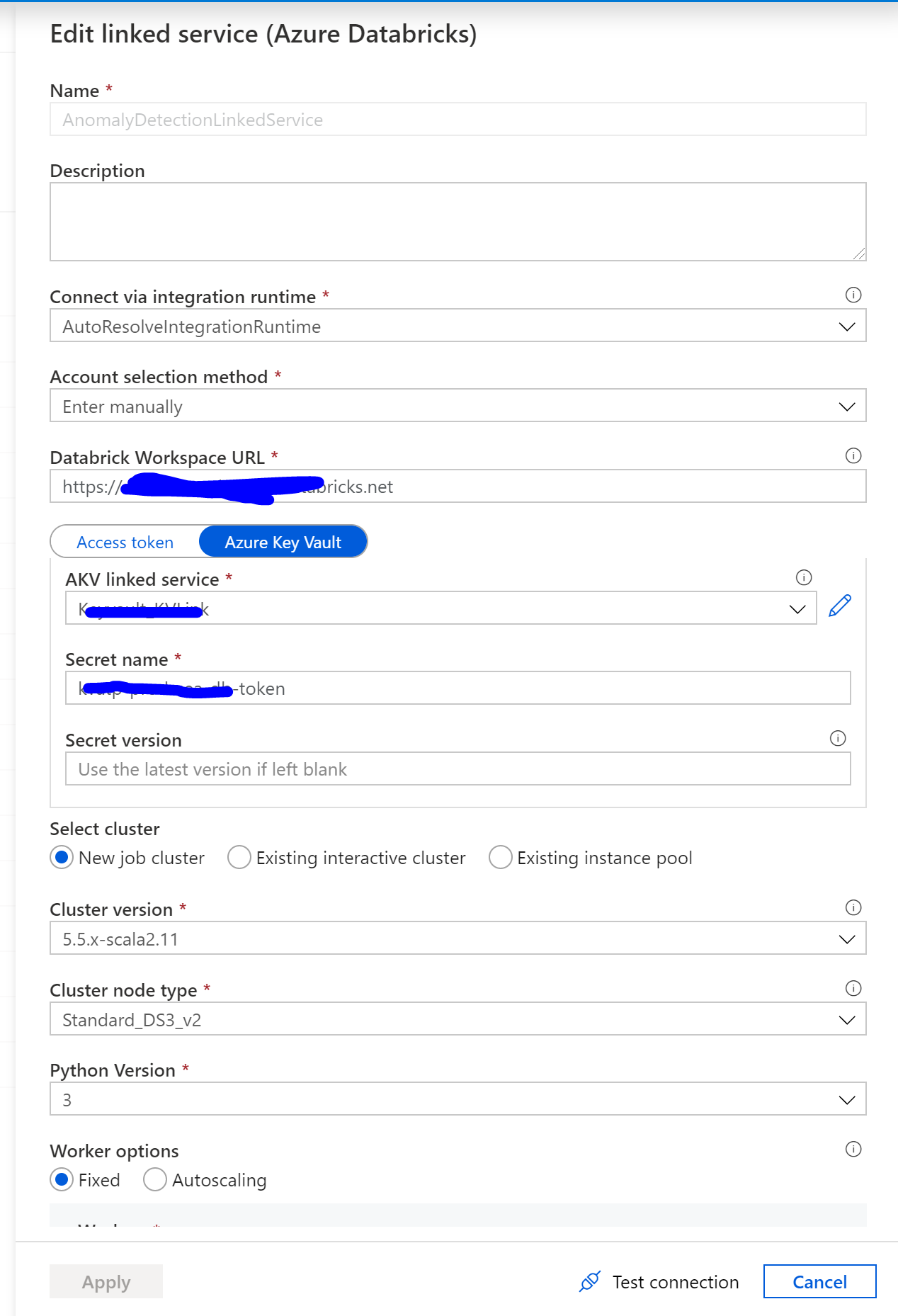

11. ADF config

For Databricks jobs scheduled by Azure Data Factory, navigate to the Azure Data Factory UI. Create a new Databricks linked service that connects to the new Databricks with the personal access token generated in step 2.
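If you manage Data Factory as code, the new linked service can also be written as JSON. The sketch below builds such a definition. It assumes the `AzureDatabricks` linked-service schema with `domain`, `accessToken`, and `existingClusterId`. The `accessToken` is read from the Key Vault secret created in step 2.2 through an existing Key Vault linked service. The function name and all argument values are hypothetical placeholders.

```python
def databricks_linked_service(name, domain, keyvault_ls, secret_name, cluster_id):
    """Build an ADF AzureDatabricks linked-service definition as a dict.

    Assumption: the token is referenced from Key Vault through an existing
    Key Vault linked service named `keyvault_ls`.
    """
    return {
        "name": name,
        "properties": {
            "type": "AzureDatabricks",
            "typeProperties": {
                "domain": domain,  # URL of the NEW workspace
                "accessToken": {
                    # Read the token from Key Vault instead of pasting it inline.
                    "type": "AzureKeyVaultSecret",
                    "store": {"referenceName": keyvault_ls, "type": "LinkedServiceReference"},
                    "secretName": secret_name,
                },
                "existingClusterId": cluster_id,  # interactive cluster in the new workspace
            },
        },
    }
```

Serialize the result with `json.dumps(..., indent=2)` for your ADF repository. Referencing Key Vault means that later rotating the token only requires updating the secret.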

Reference

https://docs.microsoft.com/en-us/azure/databricks/administration-guide/cloud-configurations/azure/vnet-inject

https://docs.microsoft.com/en-us/azure/azure-databricks/howto-regional-disaster-recovery#detailed-migration-steps

https://docs.microsoft.com/en-us/azure/databricks/dev-tools/cli/

https://docs.microsoft.com/en-us/azure/databricks/security/secrets/secret-scopes